At some point, every growth or performance marketer faces this dilemma.

You want to run A/B tests. You know testing improves conversion rates, landing pages, and long-term revenue. But your paid ads are already expensive. Budgets are tight. Every dip in performance gets noticed. And the fear is real.

What if testing hurts my ads?

What if conversion rate drops and algorithms punish me?

What if I waste spend while experimenting?

This fear is not irrational. Poorly planned A/B tests can absolutely damage paid ads performance. But avoiding testing altogether is far more dangerous.

The truth is simple. Brands that scale sustainably learn how to test without destabilizing acquisition. Brands that avoid testing end up optimizing ads harder and harder while the website stays weak.

This blog is a practical guide to running A/B tests safely, especially when paid ads are a major growth channel. We will break down how to structure A/B tests, how to protect ad performance, how to test landing pages and creatives responsibly, and which tools and frameworks help minimize risk.

Throughout the article, we will also touch on how a conversion rate optimization company like CustomFit.ai supports this process by helping teams test in controlled ways using an A/B Testing Platform, A/B testing software, and A/B testing tools built for ecommerce and D2C brands.

This is not about reckless experimentation. It is about disciplined testing that improves performance instead of threatening it.

Paid ads operate on fragile systems.

Algorithms optimize based on conversion signals. Small fluctuations can affect learning phases, delivery, and costs. When something changes suddenly, performance marketers feel it immediately.

A/B testing, on the other hand, introduces variation by design.

When these two worlds collide without planning, problems happen.

Conversion rate drops temporarily

Cost per acquisition increases

Learning phases reset

Stakeholders panic

This is why many teams either avoid testing or test too aggressively.

The solution is not to stop testing. The solution is to test intelligently.

The first mindset shift is this.

You do not test everything at once. And you do not test on all traffic.

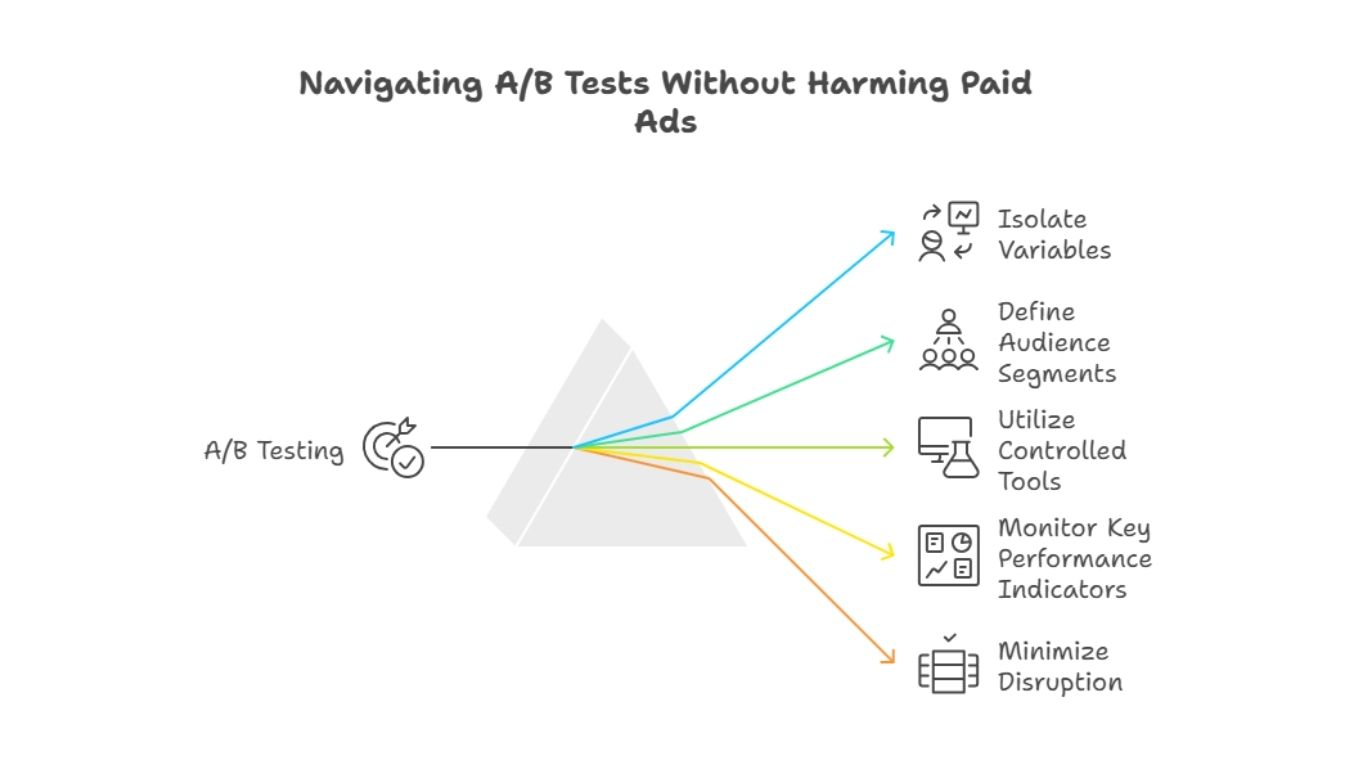

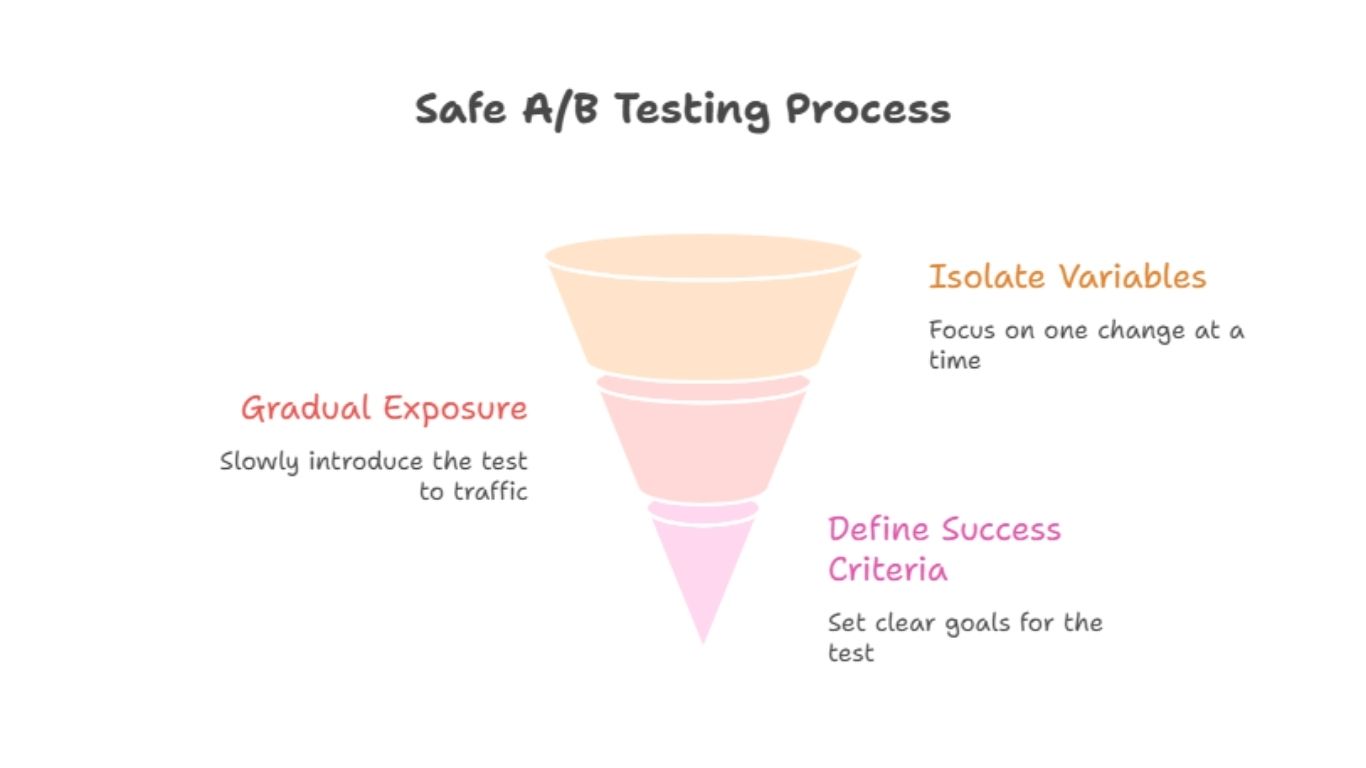

Safe A/B testing follows three principles.

Isolation

Gradual exposure

Clear success criteria

When you isolate variables, limit exposure, and define guardrails, A/B tests stop being risky and start being predictable.

Let us break this down step by step.

One of the biggest mistakes teams make is mixing concerns.

They blame ads when the website changes. Or blame the website when ad creatives change. This creates confusion and fear.

Before testing, separate these layers mentally and operationally.

Ad performance depends on targeting, creative, bidding, and platform signals.

Website performance depends on messaging, layout, trust, and usability.

When you test website changes, your goal is to improve how traffic converts, not how ads deliver.

This distinction matters because it influences how you structure tests.

Risk does not come from testing itself. It comes from uncontrolled testing.

Here are the core strategies that protect paid performance.

Test on a subset of traffic

Avoid touching conversion tracking logic

Do not change too many variables at once

Set performance guardrails

Monitor leading indicators

When teams follow these principles, testing becomes a safety net instead of a threat.

Traffic splitting is one of the most misunderstood aspects of A/B testing.

Many teams assume that running an A/B test means half of paid traffic will see an unproven version. That is not true. You decide how much traffic each version receives, and a conservative allocation looks like this.

Start with 10 to 20 percent of traffic for the variant

Keep 80 to 90 percent on the control

Scale exposure only after early validation

Modern A/B Testing Platforms allow precise traffic allocation so you are never forced into a risky 50-50 split.

This approach ensures that even if a test underperforms, the majority of paid traffic remains protected.
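To make the idea concrete, here is a minimal sketch of how a small, stable traffic split can work. It is an illustration and not how any particular A/B Testing Platform implements allocation. The function name `assign_variant`, the `variant_share` parameter, and the visitor and experiment IDs are all assumptions made for this example.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str, variant_share: float = 0.10) -> str:
    """Deterministically place a visitor in 'control' or 'variant'.

    variant_share is the fraction of traffic sent to the variant
    (0.10 to 0.20 matches the conservative starting range above).
    """
    if not 0.0 <= variant_share <= 1.0:
        raise ValueError("variant_share must be between 0 and 1")
    # Hash the experiment and visitor together so the same visitor can land
    # in different buckets for different experiments.
    digest = hashlib.sha256(f"{experiment}:{visitor_id}".encode("utf-8")).hexdigest()
    # Map the first 8 hex digits to a number in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "variant" if bucket < variant_share else "control"


# Hypothetical IDs: the same visitor always gets the same answer.
print(assign_variant("visitor-42", "headline-test", variant_share=0.10))
```

Because the bucket depends only on the visitor and the experiment, raising `variant_share` from 0.10 to 0.20 keeps everyone who already saw the variant in the variant and only adds new visitors to it. That is what makes gradual scaling after early validation safe.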

Landing pages are often the biggest lever for improving paid performance. They are also the biggest risk when changed recklessly. A careless change can set off a chain of problems.

Conversion rate drops temporarily

Ad algorithms read the drop as lower conversion quality

Costs increase before learning stabilizes

To avoid that chain reaction, keep landing page tests conservative.

Test messaging before layout

Avoid drastic design changes initially

Keep page load speed constant

Maintain the same primary conversion event

For example, test headline clarity or trust signals before redesigning the entire page.

Using an A/B testing tool like CustomFit.ai allows teams to run visual tests without changing URLs, tracking, or ad destinations, which keeps ad platforms stable.

One of the safest ways to test without hurting ads is segmentation.

Instead of testing across all paid traffic, isolate specific segments.

New visitors only

Returning visitors only

Organic traffic before paid traffic

Specific campaign or ad set

By testing on lower-risk segments first, you gain confidence before exposing high-cost paid traffic.

Segmentation also improves learning quality because different audiences behave differently.
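A segment rule can be as simple as an eligibility check that runs before a visitor is entered into a test. The sketch below is an assumption-laden illustration: the `Visit` fields, the source labels such as `"organic"` and `"paid_social"`, and the `is_eligible` function are hypothetical names, not part of any specific tool.

```python
from dataclasses import dataclass
from typing import Optional, Set


@dataclass(frozen=True)
class Visit:
    source: str                     # hypothetical labels, e.g. "organic", "paid_social"
    is_returning: bool
    campaign: Optional[str] = None  # campaign or ad set name, if known


def is_eligible(
    visit: Visit,
    allowed_sources: Set[str],
    new_visitors_only: bool = False,
    campaigns: Optional[Set[str]] = None,
) -> bool:
    """Return True if this visit belongs to the segment under test."""
    if visit.source not in allowed_sources:
        return False
    if new_visitors_only and visit.is_returning:
        return False
    # When a campaign allow-list is given, only those campaigns enter the test.
    if campaigns is not None and visit.campaign not in campaigns:
        return False
    return True


# Phase 1: organic traffic only, so paid spend is never exposed.
print(is_eligible(Visit("organic", is_returning=False), {"organic"}))
```

Visitors who fail the check simply see the control experience, so widening the test later means loosening the rule rather than rebuilding the experiment.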

Display ads and programmatic placements can burn budget quickly if tested poorly.

Test creatives within the same campaign

Keep budgets capped tightly

Run tests for shorter, defined windows

Pause losing variants early

Avoid launching multiple new creatives at once. Instead, compare one controlled variable such as imagery or headline.

This keeps budget waste low while still producing learning.

Search ads are intent-driven. Even small wording changes can impact performance.

Use platform-native experiments where possible

Test one headline or description at a time

Keep bidding and targeting constant

Avoid testing during peak sales periods

Search platforms often provide built-in experiment tools that isolate changes and protect baseline performance.

When paired with landing page A/B Testing, search copy tests become even more powerful.

Social platforms like Facebook and Instagram reward consistency. Abrupt changes can reset learning.

Duplicate the existing ad set

Introduce one creative change only

Limit budget for the test ad set

Run both in parallel

Monitor cost per result and conversion quality

Avoid editing live ads that are performing well. Instead, create controlled experiments alongside them.

This approach preserves performance while allowing creative exploration.

Safe testing depends heavily on tooling.

The best tools share these characteristics.

Ability to limit traffic exposure

Clear performance reporting

Fast rollback options

Minimal performance overhead

An A/B Testing Platform that integrates smoothly with ecommerce sites allows teams to test landing pages without touching ad setup.

CustomFit.ai, for example, enables controlled website experimentation while keeping ad destinations stable, which reduces risk to paid campaigns.

You can A/B test a CTA button using the CustomFit.ai app, which is compatible with Shopify, Shoplazza, Shopline, WooCommerce, WordPress, Magento, BigCommerce, custom-coded websites, Salesforce Commerce Cloud, and many more.

Scaling ads is often when teams stop testing. This is a mistake.

Scaling increases the importance of conversion optimization because every small gain multiplies.

Avoid major structural changes

Focus on micro improvements

Use smaller traffic splits

Test during stable performance windows

When done right, A/B Testing improves scalability instead of slowing it down.

Paid ads often fail not because targeting is wrong, but because landing experiences are weak.

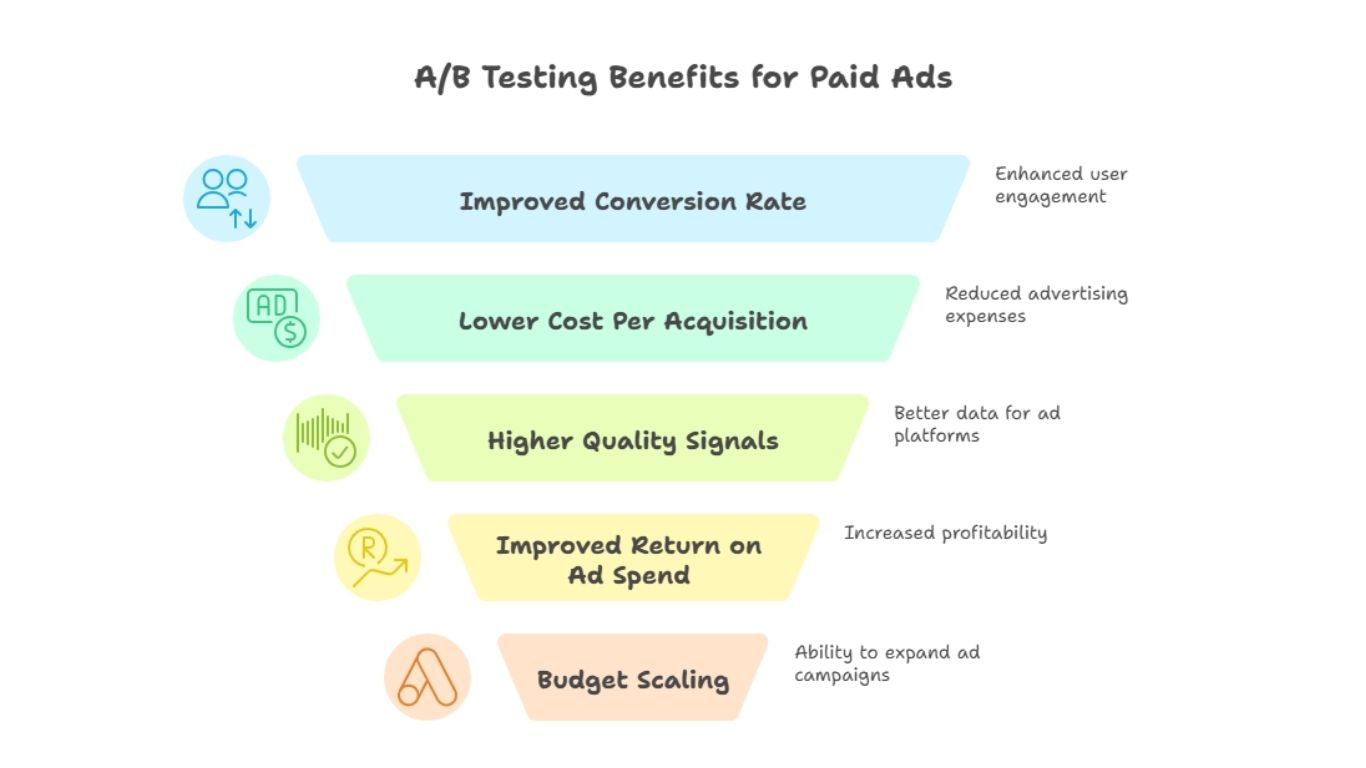

Better conversion rate leads to:

Lower cost per acquisition

Higher quality signals to ad platforms

Improved return on ad spend

More room to scale budgets
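The arithmetic behind this list is worth seeing once. If you pay per click, cost per acquisition is roughly cost per click divided by conversion rate, and return on ad spend is roughly average order value divided by cost per acquisition. The sketch below uses made-up numbers (a $1.50 click, a $90 order) purely for illustration, and the function names are assumptions for this example.

```python
def cost_per_acquisition(cost_per_click: float, conversion_rate: float) -> float:
    """CPA = CPC / CVR, with conversion_rate as a fraction (0.02 means 2%)."""
    if conversion_rate <= 0:
        raise ValueError("conversion_rate must be positive")
    return cost_per_click / conversion_rate


def return_on_ad_spend(average_order_value: float, cpa: float) -> float:
    """ROAS = revenue per acquisition / cost per acquisition."""
    if cpa <= 0:
        raise ValueError("cpa must be positive")
    return average_order_value / cpa


# Illustrative numbers only: same ads, same click cost, better landing page.
baseline_cpa = cost_per_acquisition(1.50, 0.020)   # about $75 per order
improved_cpa = cost_per_acquisition(1.50, 0.025)   # about $60 per order

print(round(baseline_cpa, 2), round(return_on_ad_spend(90.0, baseline_cpa), 2))
print(round(improved_cpa, 2), round(return_on_ad_spend(90.0, improved_cpa), 2))
```

In this example, moving conversion rate from 2 percent to 2.5 percent cuts cost per acquisition by a fifth without touching targeting, bids, or creative. That headroom is what lets budgets scale.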

Testing is not an enemy of paid ads. It is a long-term ally.

The key is patience and discipline.

A conversion rate optimization company brings structure to experimentation.

Instead of random tests, they focus on:

Clear hypotheses

Incremental changes

Business-aligned metrics

Risk management

CustomFit.ai supports this approach by helping brands test and personalize experiences without destabilizing acquisition.

This makes experimentation safer for teams that rely heavily on paid traffic.

The most mature teams integrate ads and experimentation.

They do not treat them as separate silos.

Ads drive intent

Website tests improve fulfillment of intent

Learnings inform future creatives

Performance compounds over time

This creates a feedback loop where ads and website optimization strengthen each other.

Avoid these pitfalls.

Testing too many changes at once

Exposing all paid traffic to unproven variants

Changing conversion events mid-test

Reacting too early to short-term data

Testing during peak sales days

Most testing failures are process failures, not tool failures.

Not every test needs to run to statistical perfection.

You should define guardrails before launching.

For example:

Pause if conversion rate drops beyond a threshold

Scale if early signals show improvement without harming CPA

Extend tests if data is inconclusive

This prevents emotional decisions and protects budgets.
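Guardrails work best when they are written down as explicit rules before launch. Here is one way the three rules above could be encoded. The thresholds (a 15 percent relative drop in conversion rate, a 5 percent CPA tolerance, a 1,000 visitor minimum) are placeholder assumptions, not recommendations, and `guardrail_decision` is a hypothetical helper.

```python
def guardrail_decision(
    control_cr: float,
    variant_cr: float,
    control_cpa: float,
    variant_cpa: float,
    variant_visitors: int,
    max_cr_drop: float = 0.15,     # assumed: pause at a 15% relative drop
    cpa_tolerance: float = 0.05,   # assumed: allow CPA to drift up to 5%
    min_visitors: int = 1000,      # assumed: do not react to tiny samples
) -> str:
    """Return 'pause', 'scale', or 'extend' for a running test."""
    if control_cr <= 0 or control_cpa <= 0:
        raise ValueError("control metrics must be positive")
    # Too little data: avoid reacting early to short-term noise.
    if variant_visitors < min_visitors:
        return "extend"
    relative_cr_change = (variant_cr - control_cr) / control_cr
    # Pause if conversion rate drops beyond the agreed threshold.
    if relative_cr_change <= -max_cr_drop:
        return "pause"
    # Scale only if conversion improves and CPA is not harmed.
    if relative_cr_change > 0 and variant_cpa <= control_cpa * (1 + cpa_tolerance):
        return "scale"
    # Anything in between is inconclusive.
    return "extend"


print(guardrail_decision(0.020, 0.023, 50.0, 48.0, variant_visitors=5000))
```

The exact numbers matter less than agreeing on them in advance. Once the rule exists, a bad day triggers a predefined action instead of a debate.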

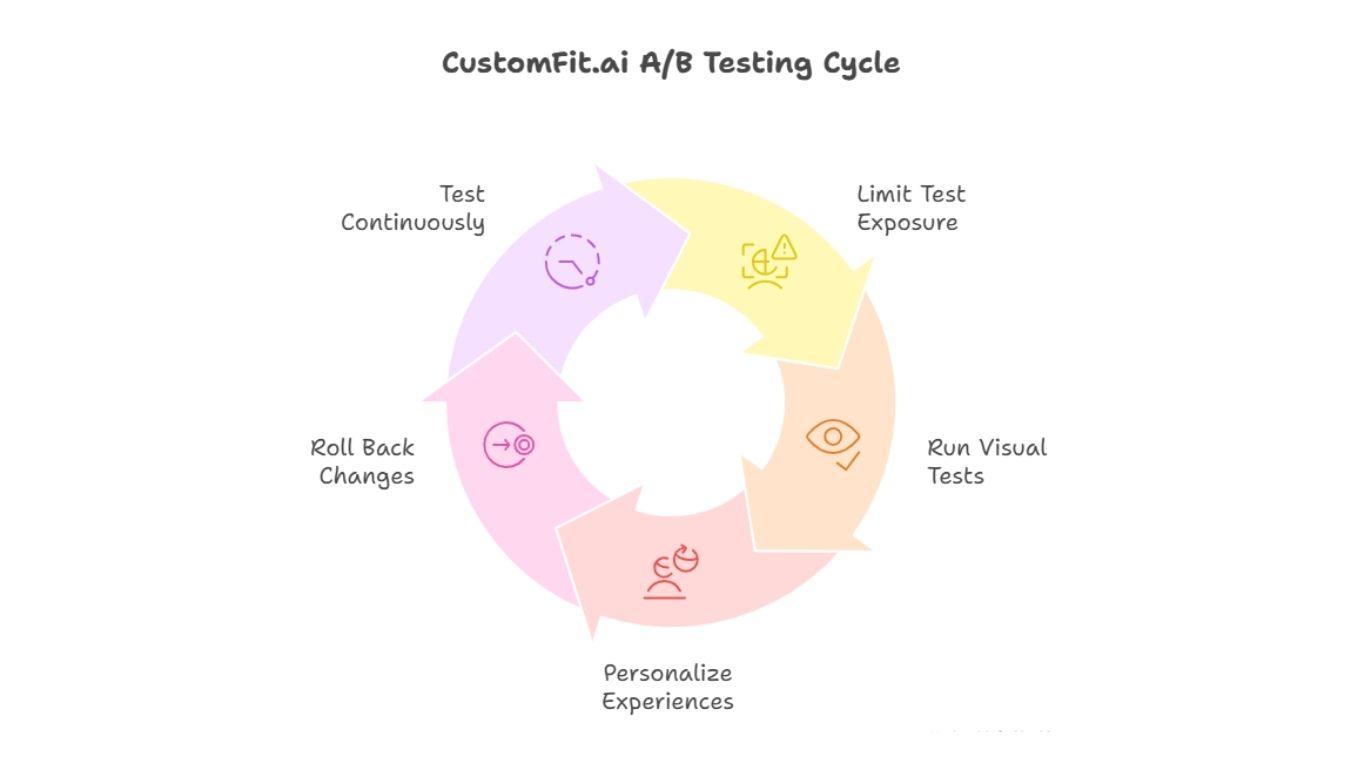

CustomFit.ai is a conversion rate optimization company focused on safe, controlled experimentation for ecommerce and D2C brands.

It helps teams:

Limit test exposure to small traffic segments

Run visual A/B tests without code changes

Personalize experiences without changing ad destinations

Roll back changes instantly if performance dips

This approach allows teams to test continuously without fearing paid performance collapse.

The biggest benefit of safe A/B testing is cultural.

Teams stop fearing change.

Stakeholders trust the process.

Learning becomes systematic.

Growth becomes predictable.

By treating A/B Testing as a disciplined practice instead of a gamble, brands unlock sustainable performance gains.

Paid ads and A/B Testing do not have to be enemies.

When testing is structured, controlled, and measured properly, it strengthens paid performance instead of weakening it.

The brands that win are not those who avoid testing. They are the ones who learn how to test without panic.

By isolating variables, limiting exposure, segmenting audiences, and using the right A/B Testing Platform and A/B testing tools, you can improve conversion rate while protecting ad spend.

Tools like CustomFit.ai help make this process safer, but the mindset matters most.

Testing is not about risking performance. It is about protecting it long term.

You can run A/B tests safely by limiting traffic exposure, isolating variables, setting performance guardrails, and testing on segments before full rollout.

The best tools allow controlled traffic splits, fast rollbacks, and minimal performance overhead. A dedicated A/B Testing Platform is ideal for this.

Facebook ads can be tested safely as well. By duplicating ad sets, limiting budgets, and testing one variable at a time, you can test Facebook ads without destabilizing performance.

Start with a small percentage of traffic for variants and scale only after early validation. Avoid exposing all paid traffic at once.

Landing page A/B tests can hurt paid ads if done poorly. When structured correctly and run through controlled tools, they often improve paid performance over time.

A/B Testing improves conversion rate, which leads to better return on ad spend, lower acquisition costs, and stronger scaling potential.

CustomFit.ai helps brands run controlled website experiments, personalize experiences, and protect paid performance through careful traffic management and measurement.