You click “publish” on a new landing page, step back, and wait. Some traffic comes in. A few conversions trickle through. But deep down, you’re left wondering: “Could that headline be stronger? Is the call-to-action positioned too far down the page? Would a different image work better?”

This is where A/B testing steps in—not as a crystal ball, but as a flashlight. It doesn’t predict the future. It highlights what is effective and what is not. And it does so without relying on gut feeling.

If you’ve been hearing about A/B testing but still feel fuzzy on how it works, how to do it, and what to test, this guide is for you.

At its core, A/B testing (also called split testing) is a method of comparing two versions of a webpage or element—like a headline, button, or image—to see which performs better.

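Suppose you have two different versions of a product page: version A (the current page) and version B (the same page with one change, such as a new headline).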

You split your traffic 50/50 between these two versions and track which version drives more purchases. Whichever wins, wins.
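To make “whichever wins” concrete: each version’s conversion rate is its conversions divided by its visitors, and the gap between the two is often reported as a relative lift. The short Python sketch below uses hypothetical counts (not data from a real test) and helper names of our own choosing.

```python
def conversion_rate(conversions: int, visitors: int) -> float:
    """Share of visitors who completed the goal action."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    return conversions / visitors


def relative_lift(rate_a: float, rate_b: float) -> float:
    """Change from A to B, expressed as a fraction of A's rate."""
    if rate_a == 0:
        raise ValueError("baseline rate must be non-zero")
    return (rate_b - rate_a) / rate_a


# Hypothetical counts after a 50/50 split of 10,000 visitors.
rate_a = conversion_rate(conversions=200, visitors=5_000)
rate_b = conversion_rate(conversions=250, visitors=5_000)
lift = relative_lift(rate_a, rate_b)

print(f"A: {rate_a:.1%}  B: {rate_b:.1%}  relative lift: {lift:+.0%}")
```

With these numbers, version B converts at 5% versus 4% for version A. That is one percentage point in absolute terms, but a 25% relative lift, so it helps to know which one a headline figure like “20% more signups” refers to.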

That’s it. That’s A/B testing in its simplest form.

But behind that simplicity is something powerful: you’re making decisions based on actual user behavior, not guesses. You’re learning what your audience prefers, not what a design blog says they should.

Why does this matter? Because most decisions online happen fast. People don’t scroll and analyze. They react, often subconsciously.

A headline that seems "fine" could actually be detrimental to conversion rates. A form with one extra field might be turning people away.

Without testing, these problems remain invisible.

With testing, they become solvable.

And here’s the thing: you don’t need to overhaul your entire website. Just changing one element at a time can teach you more than a dozen user surveys.

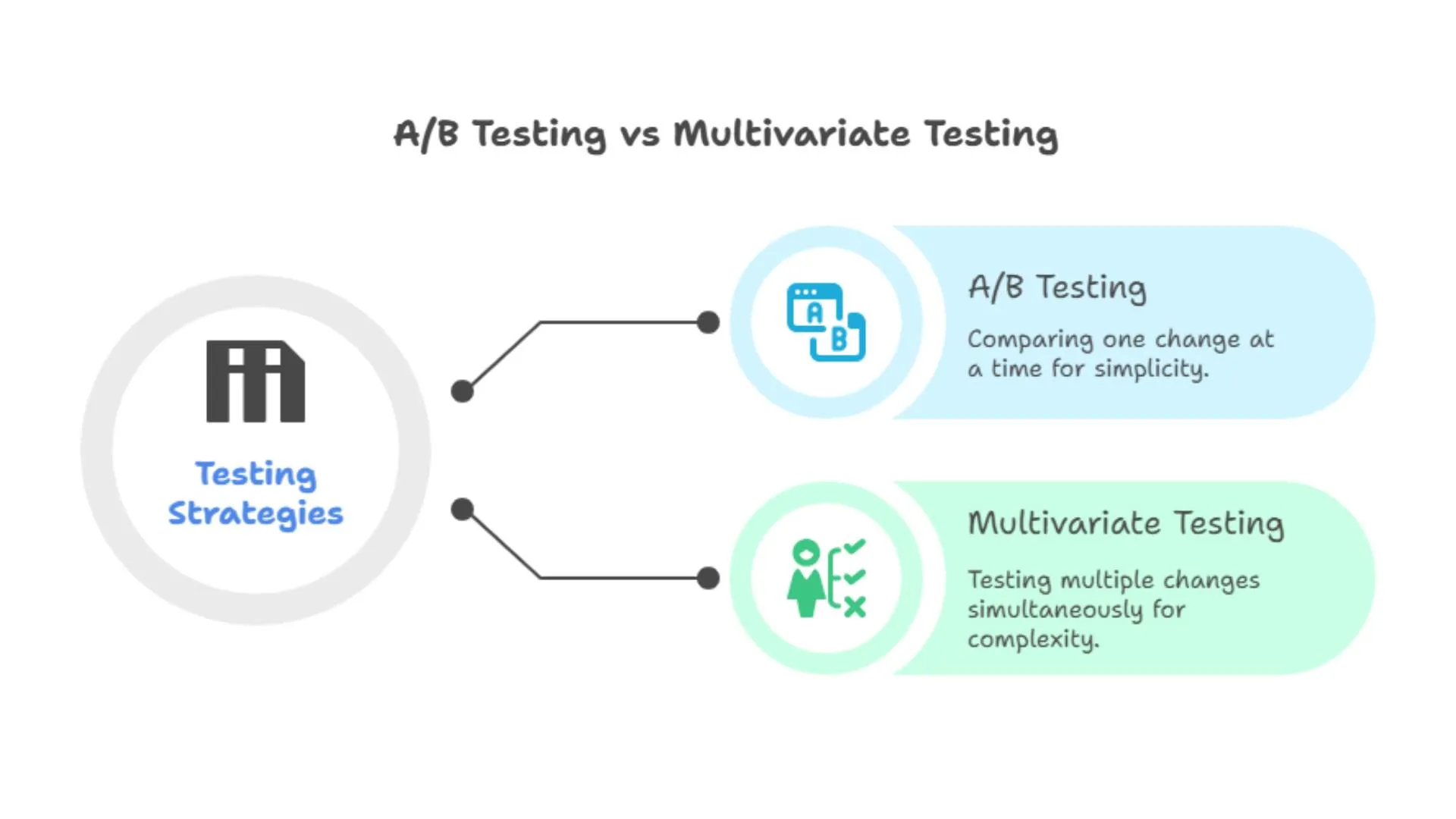

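Before we go further, a quick note on something that often confuses people: A/B testing is not the same as multivariate testing. An A/B test compares two versions that differ in one element, while a multivariate test changes several elements at once and measures how each combination performs.

While multivariate testing is powerful, it’s also more complex and requires more traffic to get meaningful results. A/B testing is simpler, easier to start with, and a better fit for most teams.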
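A rough way to see why multivariate tests need more traffic: every combination of options becomes its own variant, so the same visitors get spread across many more buckets. This Python sketch uses hypothetical page elements and a hypothetical traffic figure; the headline and button copy are borrowed from examples elsewhere in this guide.

```python
from itertools import product

# Hypothetical options for three page elements.
headlines = ["Build Websites Faster", "Build Beautiful Websites Without Code"]
buttons = ["Submit", "Get My Free Guide"]
images = ["product screenshot", "illustration", "customer photo"]

# A full multivariate test needs one variant per combination of options.
combinations = list(product(headlines, buttons, images))

monthly_visitors = 12_000  # hypothetical traffic
per_combination = monthly_visitors // len(combinations)
per_ab_version = monthly_visitors // 2  # a simple A/B test has two versions

print(f"Multivariate: {len(combinations)} combinations, ~{per_combination:,} visitors each")
print(f"A/B: 2 versions, ~{per_ab_version:,} visitors each")
```

Two headlines, two buttons, and three images already make 12 combinations, so each one sees about 1,000 visitors a month, compared with about 6,000 per version in a simple A/B test on the same traffic.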

If you're new to A/B testing, it may seem a bit technical. But the basic process is repeatable and straightforward.

Start with a goal, such as more clicks, signups, or purchases.

No need to test for the sake of testing. Start with something that matters.

Next, form a hypothesis. This is the “educated guess” part.

Example:

“Changing the call-to-action button text from ‘Submit’ to ‘Get My Free Guide’ will lead to an increase in downloads.”

Good hypotheses are clear and tied to behavior, not just design preferences.

Now, build version B of your page. It should be identical except for one change, so you know exactly what caused the result.

That change could be a different headline, button text, or image.

Your A/B testing platform (we’ll get to that in a bit) will divide traffic between both versions. The split is usually 50/50 for clean results.

Make sure each user sees only one version—consistency matters.
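A common way to keep each user in a single version is deterministic bucketing: hash a stable user ID and use the result to pick a variant, so the same person gets the same answer on every visit without you storing anything. This is a minimal Python sketch, not necessarily how any particular platform (CustomFit.ai included) does it; `assign_variant` and its parameters are hypothetical.

```python
import hashlib


def assign_variant(user_id: str, experiment: str, split_b: float = 0.5) -> str:
    """Deterministically bucket a user into "A" or "B" for one experiment.

    Hashing the experiment name together with the user ID means the same
    user always lands in the same bucket for this experiment, while
    different experiments get independent splits.
    """
    if not 0.0 <= split_b <= 1.0:
        raise ValueError("split_b must be between 0 and 1")
    key = f"{experiment}:{user_id}".encode("utf-8")
    digest = hashlib.sha256(key).digest()
    # Map the first 8 bytes of the hash to a number in [0, 1).
    bucket = int.from_bytes(digest[:8], "big") / 2**64
    return "B" if bucket < split_b else "A"


# The same visitor gets the same version on every page load.
print(assign_variant("visitor-123", "cta-button-text"))
```

Including the experiment name in the hash keeps different tests from splitting users the same way, and `split_b` lets you send less than half of your traffic to a riskier variant if you prefer.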

This is where many tests go wrong. You can’t trust results after a few hours or a few dozen visitors.

Let it run for at least 1–2 weeks, and keep it running until you’ve collected enough data for a statistically significant result.
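How much data is “enough”? Before starting, you can estimate the sample size a test needs from your current conversion rate and the smallest improvement you care about. The Python sketch below uses the standard normal-approximation formula for comparing two proportions, assuming a 5% significance level and 80% power. The function names are hypothetical, and testing platforms may use different methods (for example, sequential or Bayesian statistics).

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline: float, expected: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Approximate visitors needed in EACH version for a two-sided
    two-proportion z-test to detect a change from `baseline` to `expected`."""
    if baseline == expected:
        raise ValueError("expected rate must differ from baseline")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 when alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 when power = 0.80
    p_bar = (baseline + expected) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(baseline * (1 - baseline) + expected * (1 - expected))
    ) ** 2
    return math.ceil(numerator / (expected - baseline) ** 2)


def days_needed(per_variant: int, daily_visitors: int, variants: int = 2) -> int:
    """Days to collect the sample when traffic is split evenly across versions."""
    return math.ceil(per_variant * variants / daily_visitors)


# Hypothetical: 4% baseline conversion, hoping to detect a lift to 5%.
n = sample_size_per_variant(baseline=0.04, expected=0.05)
print(f"{n:,} visitors per version, about {days_needed(n, daily_visitors=1_000)} days")
```

Detecting a move from a 4% to a 5% conversion rate works out to roughly 6,700 visitors per version, or about two weeks at 1,000 visitors a day. Smaller expected effects need much larger samples, because the required size grows with the inverse square of the difference.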

Look at the data. Which version produced more of your goal action? If the difference holds up statistically, roll the winner out to your site.
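“Which version drove more?” also means checking that the gap isn’t just noise. A common way to do that for conversion rates is a two-proportion z-test. This is a minimal Python sketch with hypothetical counts; it assumes you fixed the sample size in advance, since checking p-values repeatedly and stopping at the first “win” inflates the false-positive rate.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(conv_a: int, n_a: int, conv_b: int, n_b: int):
    """Two-sided z-test for a difference between two conversion rates.

    Returns (z statistic, p-value) using the pooled-proportion standard error.
    """
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # overall rate if A and B were identical
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    if se == 0:
        raise ValueError("need at least one conversion and one non-conversion")
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))  # two-sided
    return z, p_value


# Hypothetical results: A converted 200 of 5,000 visitors, B converted 250 of 5,000.
z, p = two_proportion_z_test(200, 5_000, 250, 5_000)
verdict = "significant" if p < 0.05 else "not significant"
print(f"z = {z:.2f}, p = {p:.3f} ({verdict} at the 5% level)")
```

Here B’s 5.0% beats A’s 4.0% with a p-value of about 0.016, below the conventional 0.05 threshold. Many testing platforms run a check like this (or a Bayesian equivalent) for you.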

And then? Start again. Small test after small test leads to big wins.

What should you test? Honestly, almost anything. But not everything at once.

High-impact places to start include headlines, call-to-action buttons, forms, pricing pages, and pop-ups.

If you’re unsure what to test first, start where your traffic is highest or where drop-off is biggest.
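Finding the biggest drop-off is simple arithmetic: for each step in a funnel, compare the visitors who reach the next step with those who reached this one, and look for the steepest loss. A Python sketch with a hypothetical signup funnel and a helper name of our own:

```python
def biggest_drop_off(steps):
    """Return (from_step, to_step, drop_off_rate) for the steepest transition.

    `steps` is a list of (step_name, visitors_reaching_step) in funnel order.
    """
    if len(steps) < 2:
        raise ValueError("a funnel needs at least two steps")
    worst = None
    for (name_a, count_a), (name_b, count_b) in zip(steps, steps[1:]):
        drop = 1 - count_b / count_a  # share of visitors lost at this transition
        if worst is None or drop > worst[2]:
            worst = (name_a, name_b, drop)
    return worst


# Hypothetical visitor counts for a signup funnel.
funnel = [
    ("Landing page", 10_000),
    ("Pricing page", 4_000),
    ("Signup form", 1_200),
    ("Account created", 600),
]

start, end, rate = biggest_drop_off(funnel)
print(f"Biggest drop-off: {start} -> {end} ({rate:.0%} of visitors lost)")
```

In this made-up funnel, the step from the pricing page to the signup form loses 70% of visitors, which makes it a natural first candidate for a test.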

This is where platforms like CustomFit.ai come in.

You need a tool that helps you create variants, split traffic between them, and track which one performs better.

CustomFit.ai is designed to help marketers experiment and personalize content on their own terms. No code. No wait. No guesswork. While it is not the only option available, it is among the easiest to use. And that’s half the battle.

Other well-known A/B testing platforms include Optimizely and VWO. (Google Optimize, once a popular free option, was discontinued in September 2023.) Some are better suited to enterprise teams. Some require more technical setup. Choose what fits your stage and stack.

Let’s look at a few real-world scenarios:

Test: “Submit” vs. “Get My Free Ebook”

Result: The more descriptive, benefit-driven version led to a 28% increase in signups.

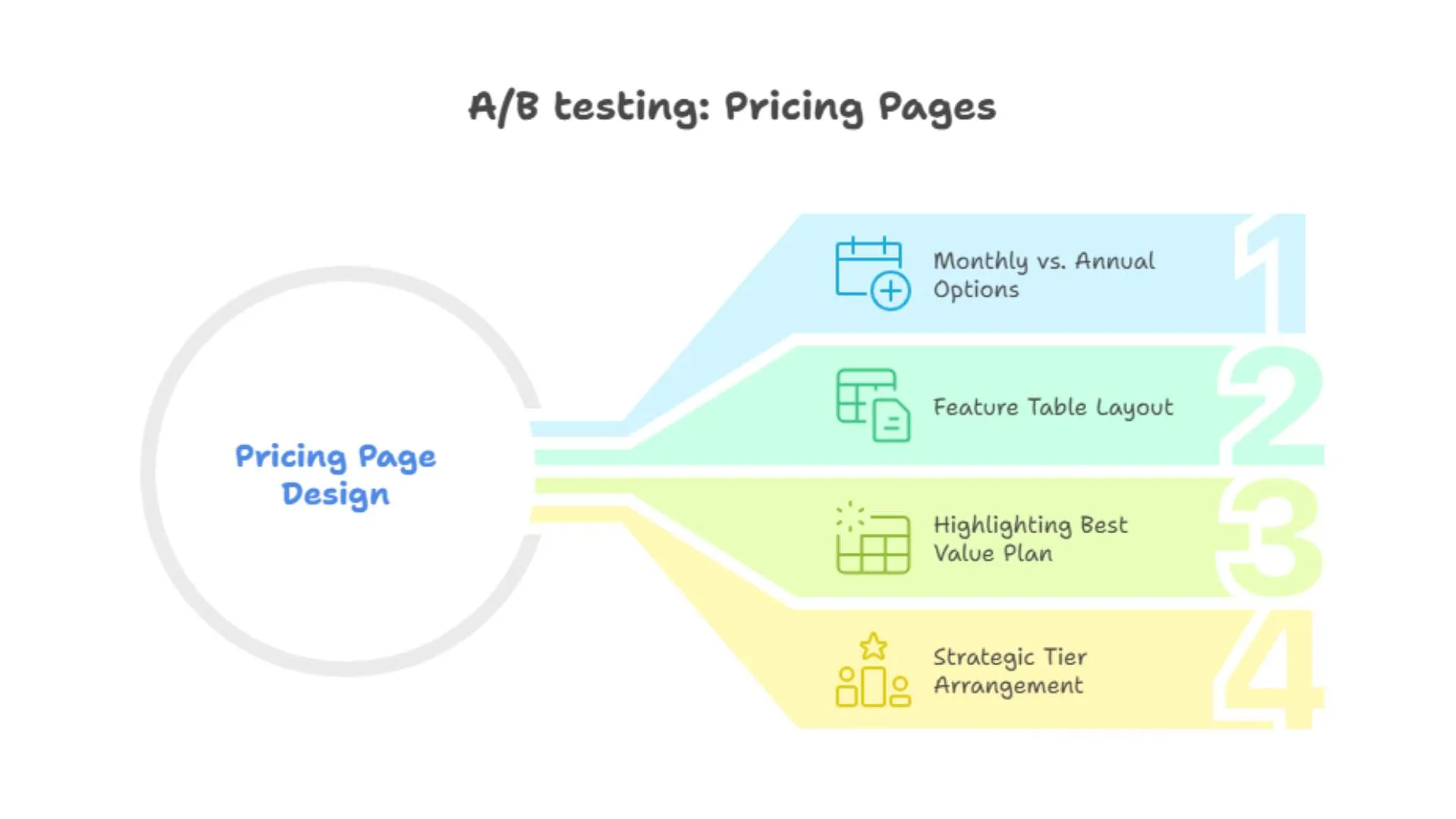

Test: No badge vs. a “Most Popular” badge on the mid-tier plan

Result: Users gravitated toward the highlighted plan, increasing its conversion rate by 20%.

Test: “Build Websites Faster” vs. “Build Beautiful Websites Without Code”

Result: The second headline, which states a clearer benefit, improved signups by 16%.

Test: Pop-up on page load vs. pop-up after 30 seconds

Result: The delayed pop-up saw better engagement and lower bounce rates.

One of the most common fears is: “Will A/B testing hurt my SEO?”

Short answer: No—if done right.

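Google supports A/B testing. What it discourages is cloaking (showing search engines different content than users see), using permanent (301) redirects for test variants instead of temporary (302) ones, and running experiments longer than necessary.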

A good A/B testing platform like CustomFit.ai keeps all variants crawlable and fast-loading, so your SEO stays safe.
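If your tool runs redirect tests (each version on its own URL) rather than swapping content on a single URL, Google’s published guidance is to use temporary (302) redirects and to point variant pages at the original with `rel="canonical"`. The Python WSGI sketch below shows both ideas. The paths, the `visitor_id` cookie, and the example.com URL are hypothetical, and a real setup would also set the cookie on a visitor’s first request.

```python
import hashlib
from http.cookies import SimpleCookie

ORIGINAL_PATH = "/pricing"                     # hypothetical original URL path
VARIANT_PATH = "/pricing-b"                    # hypothetical variant URL path
CANONICAL_URL = "https://example.com/pricing"  # hypothetical canonical URL


def in_variant_b(visitor_id: str) -> bool:
    """Stable 50/50 split: the first byte of the hash decides the bucket."""
    return hashlib.sha256(visitor_id.encode("utf-8")).digest()[0] < 128


def app(environ, start_response):
    """Minimal WSGI app for a redirect-style A/B test."""
    path = environ.get("PATH_INFO", "/")
    cookies = SimpleCookie(environ.get("HTTP_COOKIE", ""))
    # Crawlers get no special treatment: any cookieless request is handled the same way.
    visitor = cookies["visitor_id"].value if "visitor_id" in cookies else "anonymous"

    if path == ORIGINAL_PATH and in_variant_b(visitor):
        # Temporary redirect, so search engines keep the original URL indexed.
        start_response("302 Found", [("Location", VARIANT_PATH)])
        return [b""]

    if path in (ORIGINAL_PATH, VARIANT_PATH):
        # Both versions declare the original URL as canonical.
        html = f'<html><head><link rel="canonical" href="{CANONICAL_URL}"></head></html>'
        start_response("200 OK", [("Content-Type", "text/html; charset=utf-8")])
        return [html.encode("utf-8")]

    start_response("404 Not Found", [("Content-Type", "text/plain")])
    return [b"Not found"]
```

To try it locally, the standard library can serve it: `wsgiref.simple_server.make_server("", 8000, app).serve_forever()`.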

You can even use A/B testing to improve SEO: try different meta titles, headlines, or content layouts to see what keeps users engaged longer.

A/B testing isn’t a one-time project. It’s a mindset. A culture.

You don’t need to change everything. You just need to keep learning.

One test per week. One insight per iteration. That’s how you turn a decent site into a great one.

The best teams don’t just guess; they test. And the best tools don’t slow them down; they help them move faster.

If you’re ready to make your site better one decision at a time, testing is the way. And platforms like CustomFit.ai can help you start today, not next quarter.

Q: What is A/B testing?

A: A/B testing is a method of comparing two versions of a webpage or element to see which one performs better based on a specific goal, like clicks, signups, or purchases.

Q: Why is A/B testing important?

A: Because it removes guesswork. Instead of assuming what works, you test it with real users and let the data decide. This helps improve conversions, user experience, and even SEO.

Q: What is the best A/B testing tool?

A: That depends on your needs. For marketers who want a fast, no-code experience with personalization, CustomFit.ai is a top contender. Other options to consider are Optimizely, VWO, and Adobe Target.

Q: Does A/B testing hurt SEO?

A: Not if done correctly. Make sure your testing tool doesn’t block Google from crawling content, avoid cloaking, and use temporary (302) rather than permanent (301) redirects if you test separate URLs. Tools like CustomFit.ai are built with SEO safety in mind.

Q: How long should an A/B test run?

A: Usually at least 1–2 weeks, and long enough to collect the traffic you need for statistically valid results. Ending a test early can lead to inaccurate conclusions.

Q: Can I run A/B tests without coding?

A: Yes! With tools like CustomFit.ai, you can visually create test variants without writing code. This lets marketers and growth teams test and learn faster.

Whether you're optimizing your landing pages, forms, or checkout flows, A/B testing is your best bet for making smart, data-backed decisions. And when paired with a clean, intuitive platform like CustomFit.ai, you’re free to test, learn, and grow, without tech bottlenecks.